The tagline for this blog is “musings on the science of holistic wellness.” I reference “science” because in each article I try to integrate some of my own studies, experience and observations with those of researchers or scientists in the fields of psychology and positive psychology.

The problem is that the general public associates “science” with “facts,” and people assume that if something was “found” in a research study, it is “proven.” But most scientists would be the first to tell you that this is hardly the case. In fact, the modern publication process for scientific studies is fraught with biases and potential for error (especially in the social sciences, where controls are harder to establish and measure).

Because of this room for error, most scientists routinely call for “replications” of important studies before giving them too much weight. If a study can be repeated by different researchers and still achieve the same results, its findings gain a lot more validity.

The catch is that most researchers don’t want to spend their time rehashing work that has already been done. The fame and glory (usually through publication in a respected research journal) come from forging a new path and testing original ideas.

The Center for Open Science recently tried to address this issue by enlisting 270 researchers across five continents to replicate the findings of 100 psychology studies already published in prominent journals. The results were less than comforting.

In the originally published studies, 97% had statistically significant results (highlighting one of the major biases in the publication process: a preference for significant findings), compared to only 36% of the replications. That is a shockingly large discrepancy.

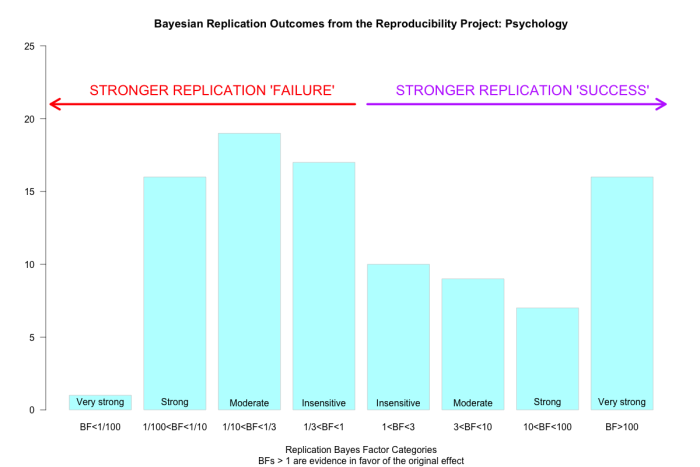

Science and statistics blogger Alex Etz argued that these statistics (as reported in major media outlets such as Newsweek and the New York Times) are misleading. Using a “Bayesian” analysis of the findings, he found that 25% of the studies showed strong replication success and 20% showed strong replication failure. Another 30% of the replications yielded inconclusive results, and the remaining 25% showed moderate results that should be taken with a grain of salt.

As Etz is quick to point out, this paints a “slightly less grim picture,” but it still does not give us a resounding endorsement of the study of psychology as it is practiced today.

My hope is that these findings give all of us a bit more license to be skeptical of published research. In a 700-word blog post or magazine article, results are often summarized as “researchers found this” or “a study showed that.”

I think of research findings as interesting ideas to try on, to see how they fit your own personal experience of the world. If you are really curious about a study, go directly to the original paper (you might find it on Google Scholar or in a university library database, or you can often request a copy directly from the researcher). By going to the source, you may find more context to help you understand the results. Often, you will find that a blog or website has misinterpreted or mischaracterized the results to make a pithier headline.

Science is not as foolproof as we tend to think. So when you see something described as “evidence based” or “supported by science,” don’t let your guard down, and don’t be afraid to be skeptical. You can’t trust everything you read in a psychology blog (not even this one).

by Jeremy McCarthy